When AI Shifts Control

As AI becomes more embedded in everyday products, something subtle changes in the user experience — often without being explicitly designed for.

At first, AI systems feel helpful. They recommend, predict, prioritize, and decide faster than we can. They reduce effort and remove uncertainty.

But over time, I've noticed a quieter shift.

Users move from making decisions to confirming them.

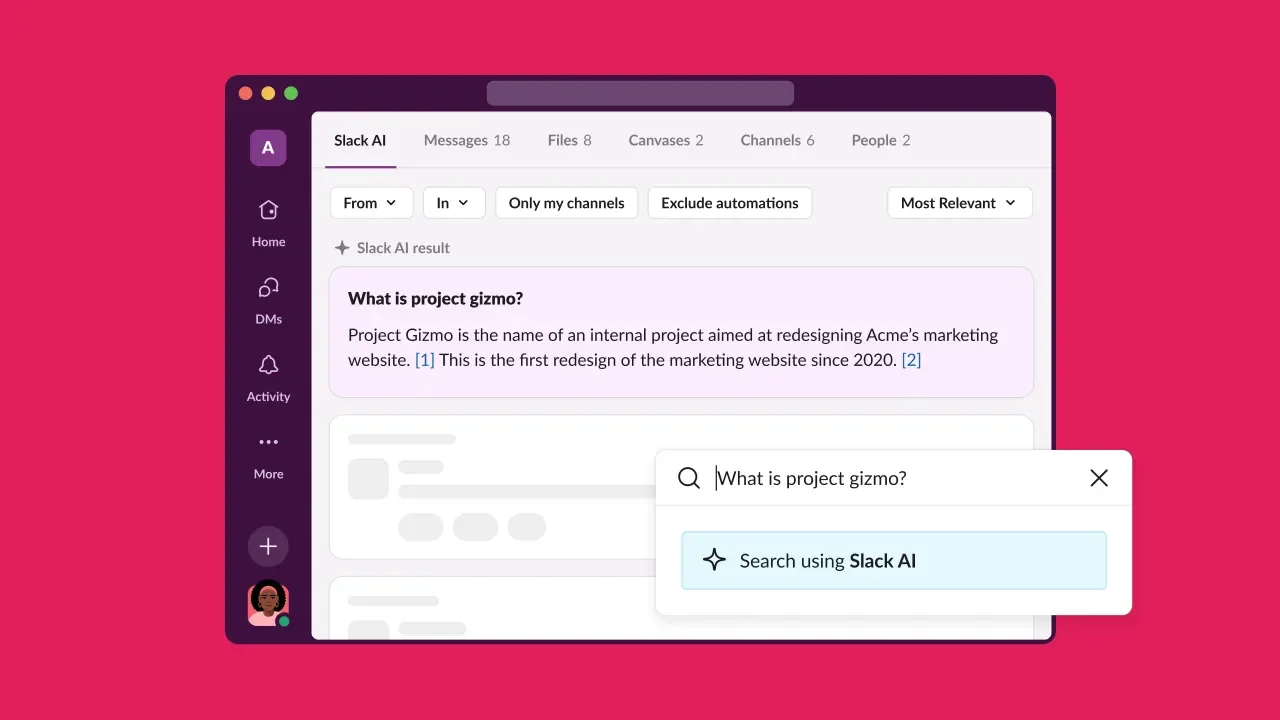

Take LinkedIn's job recommendations, Gmail's suggested replies, or Slack's AI-generated message summaries. In each case, the AI presents a ready-made option, and the user's role shrinks to accepting or rejecting it: yes or no.

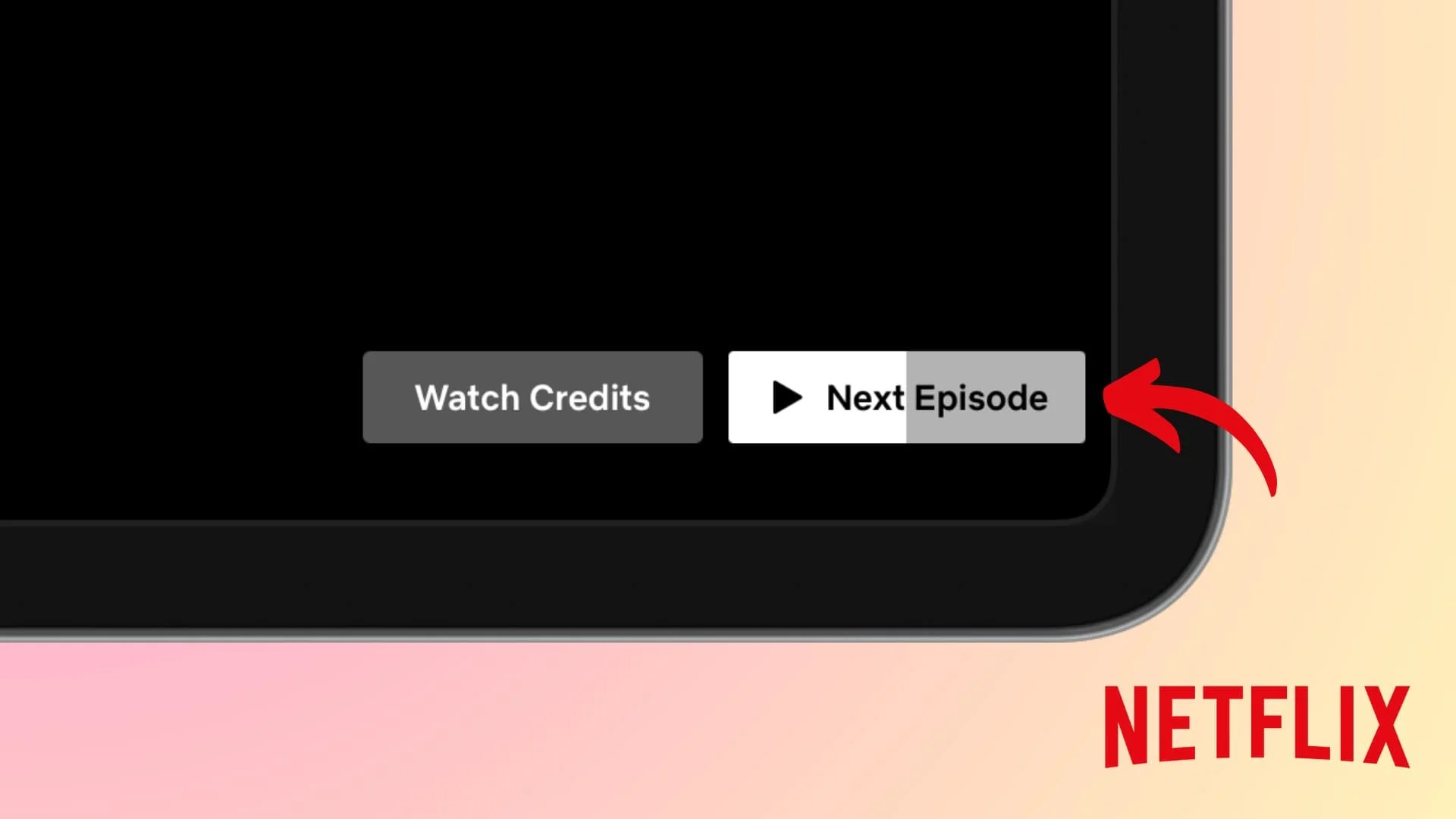

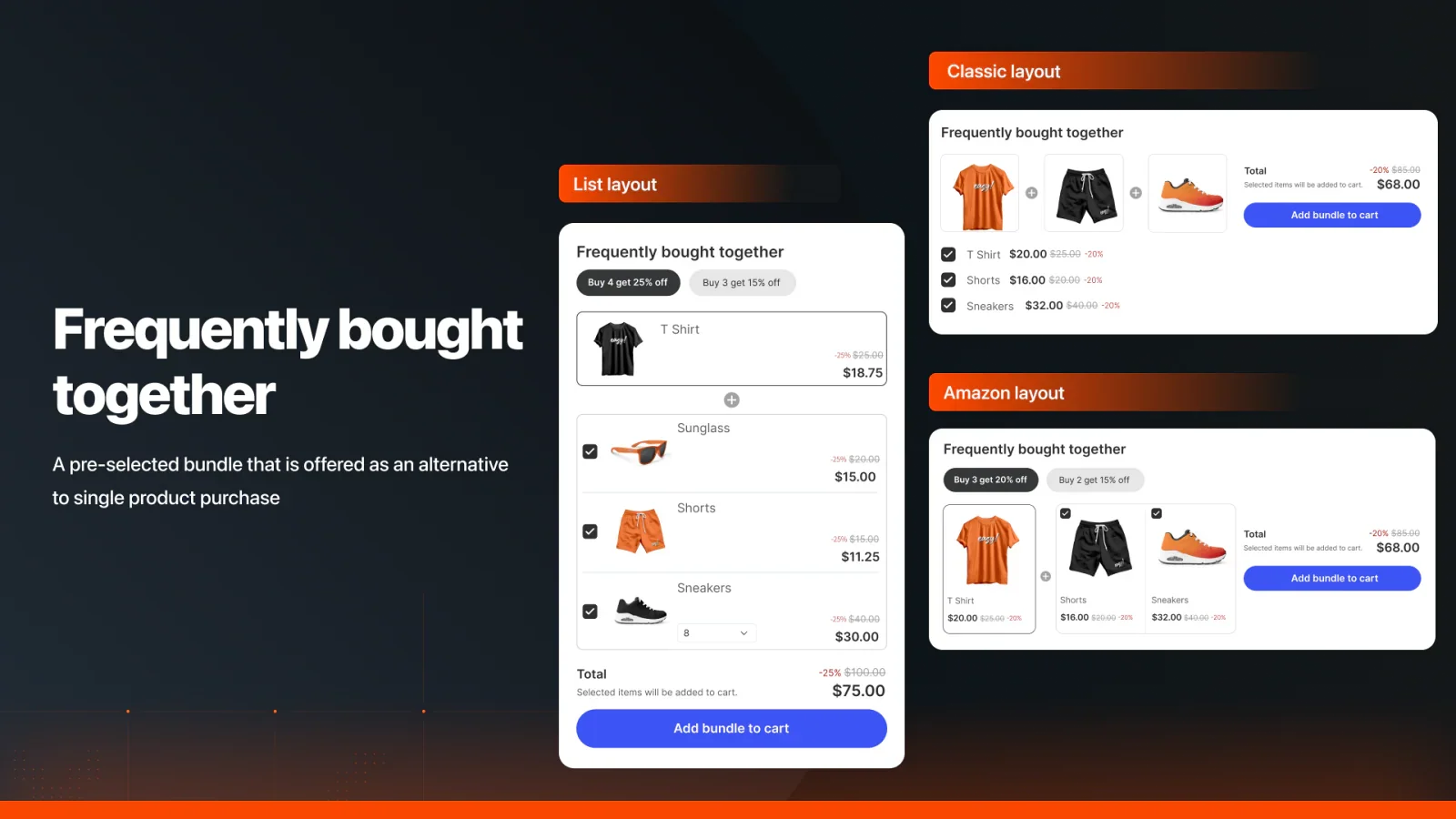

Everyday AI systems that shift decision-making from creation to confirmation

The system suggests an action. The user clicks "Approve." And over time, judgment takes a back seat to confidence scores.

This is where psychology becomes useful.

There's a concept called locus of control: the degree to which people believe outcomes are driven by their own actions (an internal locus) or by outside forces (an external one). When users feel in control, they tend to feel more engaged, more responsible, and more satisfied with outcomes.

AI systems often shift this balance.

Not aggressively. Not all at once. But incrementally.

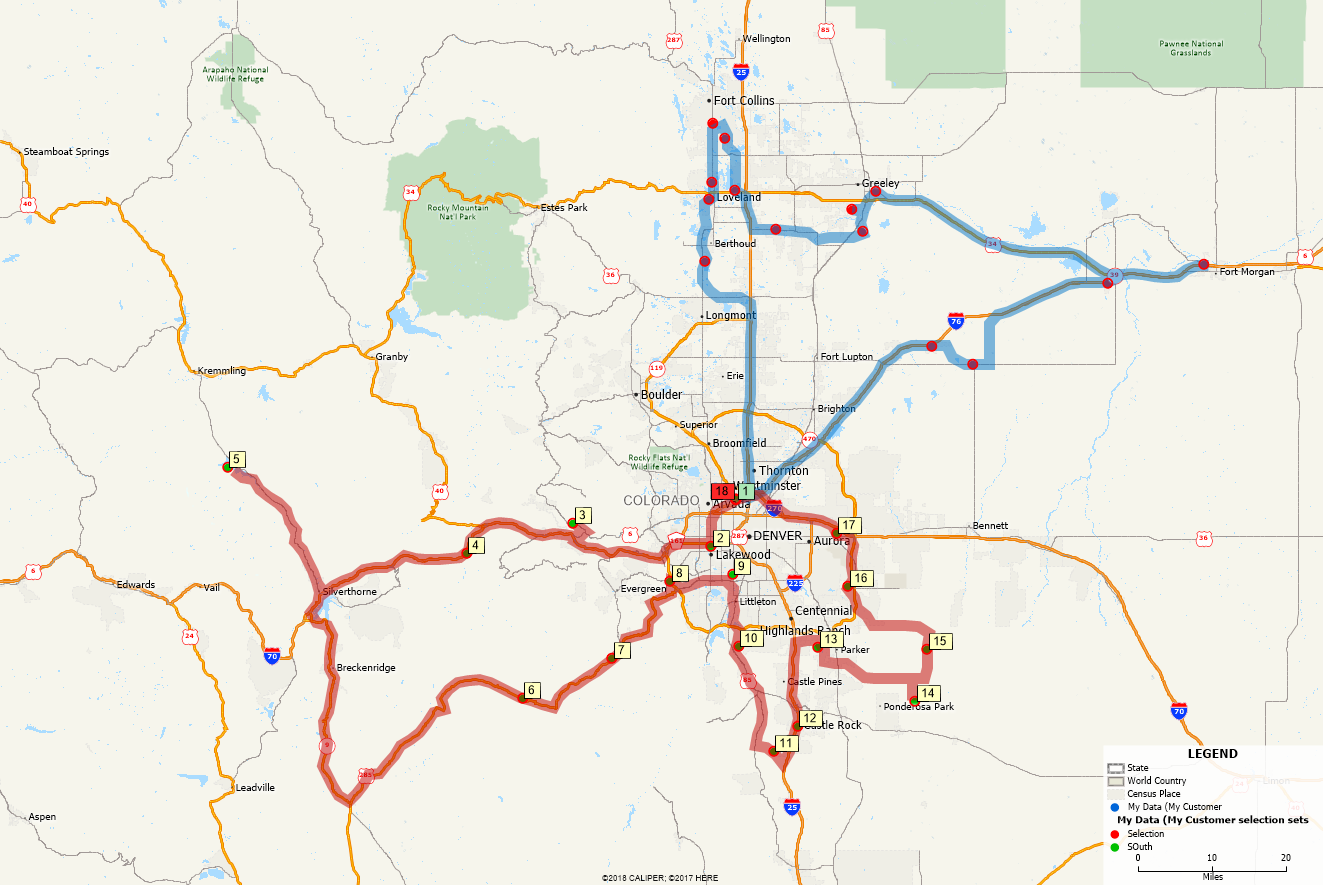

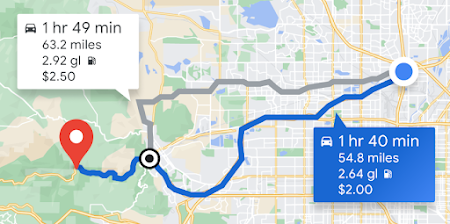

From exploring routes (left) to following instructions (right)

When systems always "know better," users begin to trust the system instead of their own reasoning. That can feel efficient — until something goes wrong.

When errors happen, users are often held accountable for decisions they never felt were fully theirs. That disconnect erodes trust, not just in the system but in the experience as a whole.

This shows up in the data, too. When users feel powerless, they don't leave immediately — they just stop caring. Engagement drops. They use features less. They switch to competitors when something better appears, because there's no sense of investment or ownership.

What's interesting is that dissatisfaction doesn't always come from bad outcomes.

It comes from loss of agency.

People want to feel involved in decisions that affect them. They want to understand why something is happening. And they want the option to disagree — even if they don't use it often.

How I Think About This

When I'm designing with AI, I ask:

"What happens if the system gets this wrong?"

If the answer is "nothing much" — automate it. Suggesting a playlist? Fine. The stakes are low.

But if the wrong decision costs someone time, money, or trust? That's where users need to stay in the loop.

Hiring decisions. Financial approvals. Content moderation. These shouldn't feel like something that simply happens to a person.
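For teams that want to write this question down, here is one rough way to sketch it in Python. This is an illustration of the heuristic above, not a framework: the names `Decision`, `Mode`, and `choose_mode` are hypothetical, and treating cost as three yes/no flags is a simplifying assumption.

```python
from dataclasses import dataclass
from enum import Enum


class Mode(Enum):
    AUTOMATE = "automate"            # the system acts on its own
    HUMAN_IN_LOOP = "human_in_loop"  # the user reviews and decides


@dataclass(frozen=True)
class Decision:
    """A product decision, described by what a wrong call would cost.

    Hypothetical model: real stakes are rarely this binary.
    """
    name: str
    costs_time: bool = False
    costs_money: bool = False
    costs_trust: bool = False


def choose_mode(decision: Decision) -> Mode:
    """Answer 'What happens if the system gets this wrong?' for one decision."""
    if decision.costs_time or decision.costs_money or decision.costs_trust:
        return Mode.HUMAN_IN_LOOP
    # "Nothing much" happens when it's wrong, so automating is fine.
    return Mode.AUTOMATE


playlist = Decision("suggest a playlist")
hiring = Decision("reject a job applicant", costs_time=True, costs_trust=True)

print(choose_mode(playlist).value)  # automate
print(choose_mode(hiring).value)    # human_in_loop
```

The value is less in the code than in the exercise: listing each AI-driven decision in a product and stating what a wrong call would cost makes it harder for control to shift by accident.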

I also look for decisions that teach something. When systems do everything, users stop learning. They lose skills they might need when the AI isn't available. Navigation is the obvious example, but it's true in creative tools too — if the AI always suggests the layout, designers stop developing their own instincts.

The goal isn't to avoid automation. It's to automate in ways that make people feel capable, not dependent.

Small moments where control quietly shifts from user to algorithm

This creates a real design challenge.

Automation improves efficiency. Agency sustains trust.

Designing AI experiences isn't about choosing one over the other. It's about deciding where humans should stay in control, and where systems should step in quietly.

Because the most successful AI products don't just optimize results.

They preserve the feeling: "I had a say in this."

The question isn't whether to use AI in our products.

It's whether we're designing systems that still need people — not just to click "Approve," but to think, choose, and sometimes disagree.

Because the moment users stop feeling necessary, they stop feeling engaged.

And that's when they start looking for something else.

What's your experience? Do you feel more or less in control with AI-powered products? I'd be curious to hear your perspective.