2025

HPE: From Menu-Driven to Intelligent Conversations

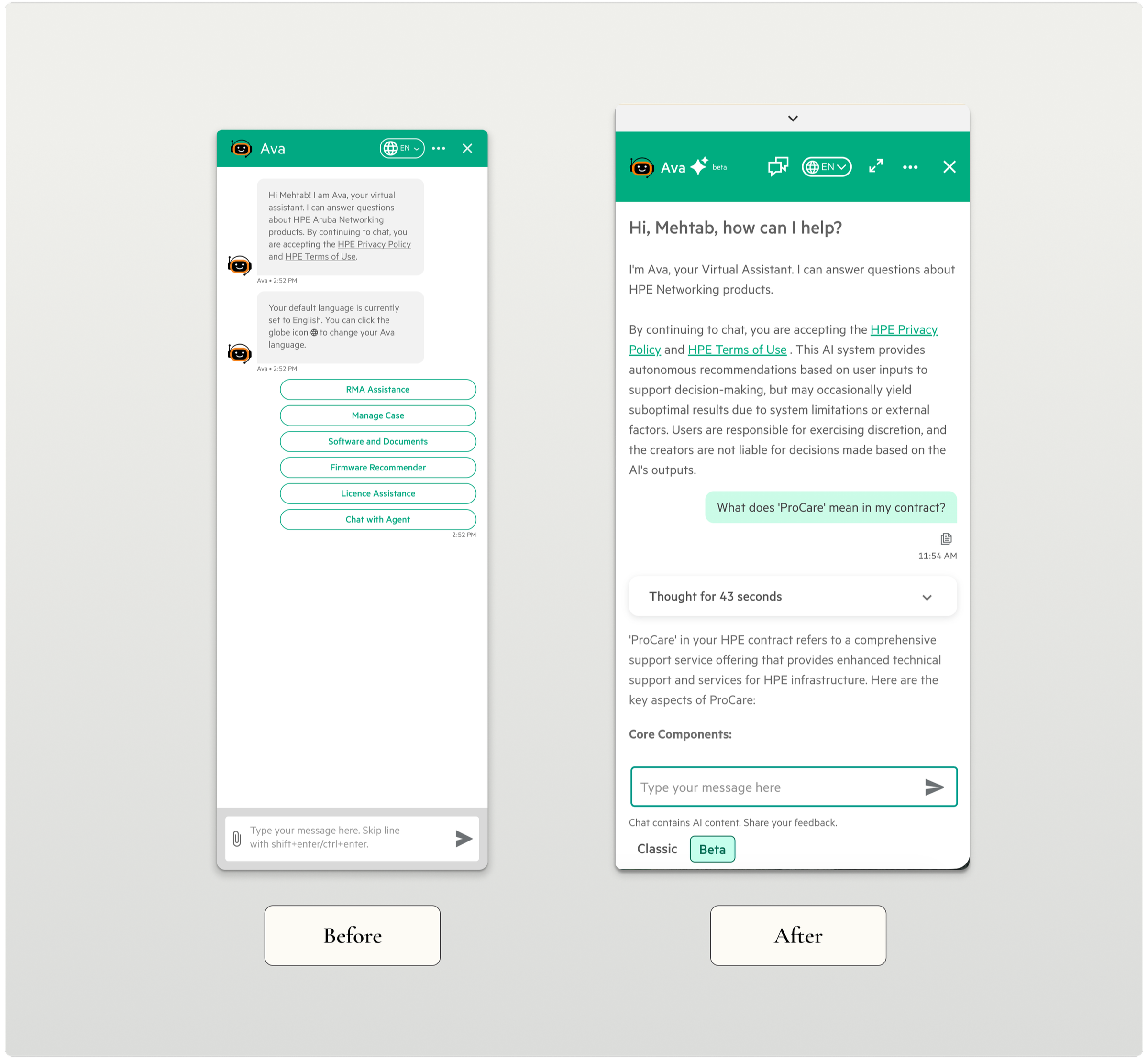

HPE's chatbot had a 78% escalation rate—users would try it, get frustrated with menus, then call an agent. I redesigned it to prioritize transparency over automation, reducing escalations to 46% in the first month.

Role: Senior Product Designer

Duration: 3 weeks

Scope: End-to-end conversational experience design (desktop, tablet, mobile)

Collaboration: Lead UX Designer, Product Manager, Engineers

🎯 The Problem

A network admin asks: "Which of my devices have expired contracts in the APJ region?"

The old chatbot response:

Click "Asset Management"

Click "Contracts"

Click "View All Documentation"

Here are 15 articles and videos

(User gives up and calls an agent)

What should take 10 seconds took 10+ minutes—if users bothered at all.

Why it failed:

Rigid menu navigation

Couldn't read or explain documentation

Generic link dumps instead of answers

No transparency about limitations

One network admin told us: "I don't even click the chatbot icon anymore. It's faster to just call support."

👥 The Users

Customer Success Managers, Network Administrators, Root Account Managers

Technical professionals managing enterprise networking who need quick answers while troubleshooting live issues.

Key insight: When networks are down, seconds matter. These users won't navigate menus—they ask questions naturally and expect immediate answers. When they can't get them, they call agents directly.

⚖️ The Design Challenge

Users wanted instant answers but didn't trust AI to provide them.

With 3 weeks to ship and imperfect AI capabilities, we couldn't guarantee 100% accuracy. The temptation was to hide limitations and make the AI seem smarter than it was.

The insight: Users don't need perfect AI—they need control. If they can verify sources, see uncertainty, and escalate anytime, they'll trust the system enough to try it.

This reframed everything: instead of "make AI smarter," we focused on "make AI transparent."

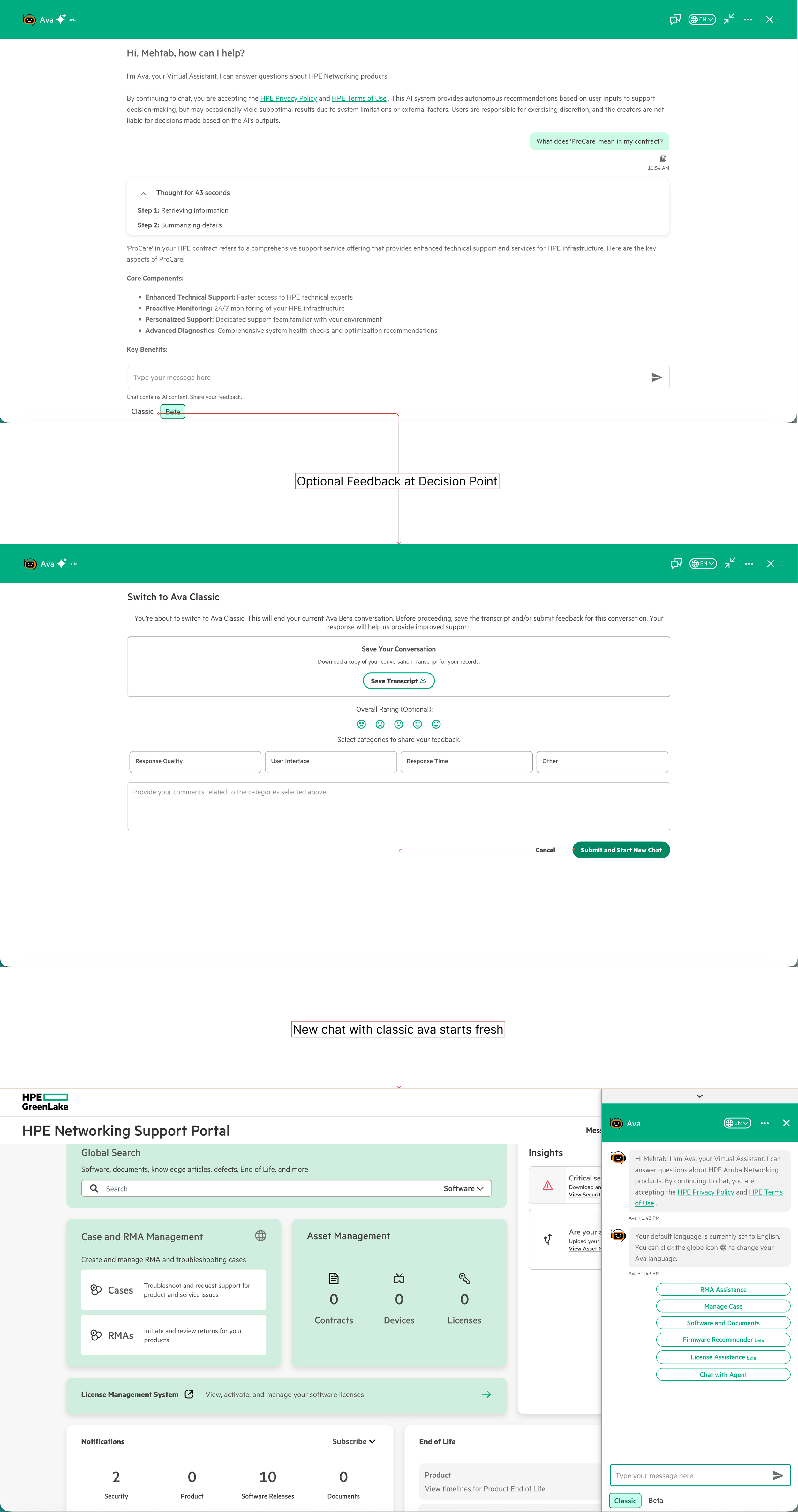

🔄 The Risky Decision: Give Users an Escape Route

The product team wanted to sunset the old menu-based chatbot entirely and force AI adoption.

I pushed back. Users who don't trust AI won't suddenly start trusting it because we removed their alternative—they'll just stop using the chatbot altogether.

What we did: Designed a seamless toggle between "AI mode" and "Classic mode."

Thoughtful mode transitions with optional feedback and transcript download—respecting user choice while gathering insights

Why this worked:

The classic mode option felt risky to stakeholders—why give users an escape route? But it actually helped adoption by giving users control. When users know they can bail out, they're more willing to try. When they feel trapped, they resist.

The data benefit: Every mode switch included optional feedback, showing us when and why users lost trust.

What we included:

Optional feedback at decision point (captures "why" without forcing input)

Download conversation transcript (no data lost when switching)

Clean handoff (new chat starts fresh with clear context)

💬 The Solution: Show the AI's Work

Instead of hiding what the AI was doing, we exposed it.

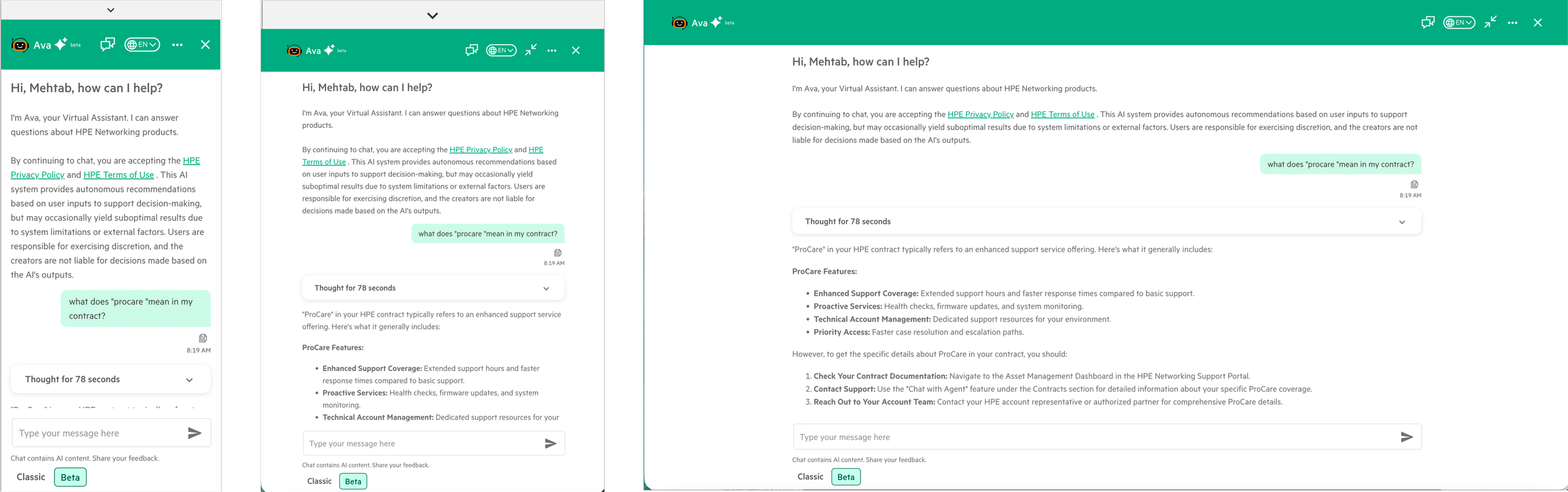

1. Ask Questions Naturally

Users were abandoning the chatbot after 2-3 clicks through menus. We removed menus entirely—letting them type questions like they'd ask a colleague.

This felt risky. What if the AI misunderstood? Testing showed users preferred occasionally rephrasing over clicking through 5 menu levels.

Natural conversation replaces rigid menus—personalized greeting, context awareness, and intelligent suggestions

How it works:

Plain language questions—no menu hunting

Context awareness (current account, page, previous conversation)

Personalized greetings and relevant follow-up suggestions

The shift: From "click the right sequence" to "ask what you actually want to know."

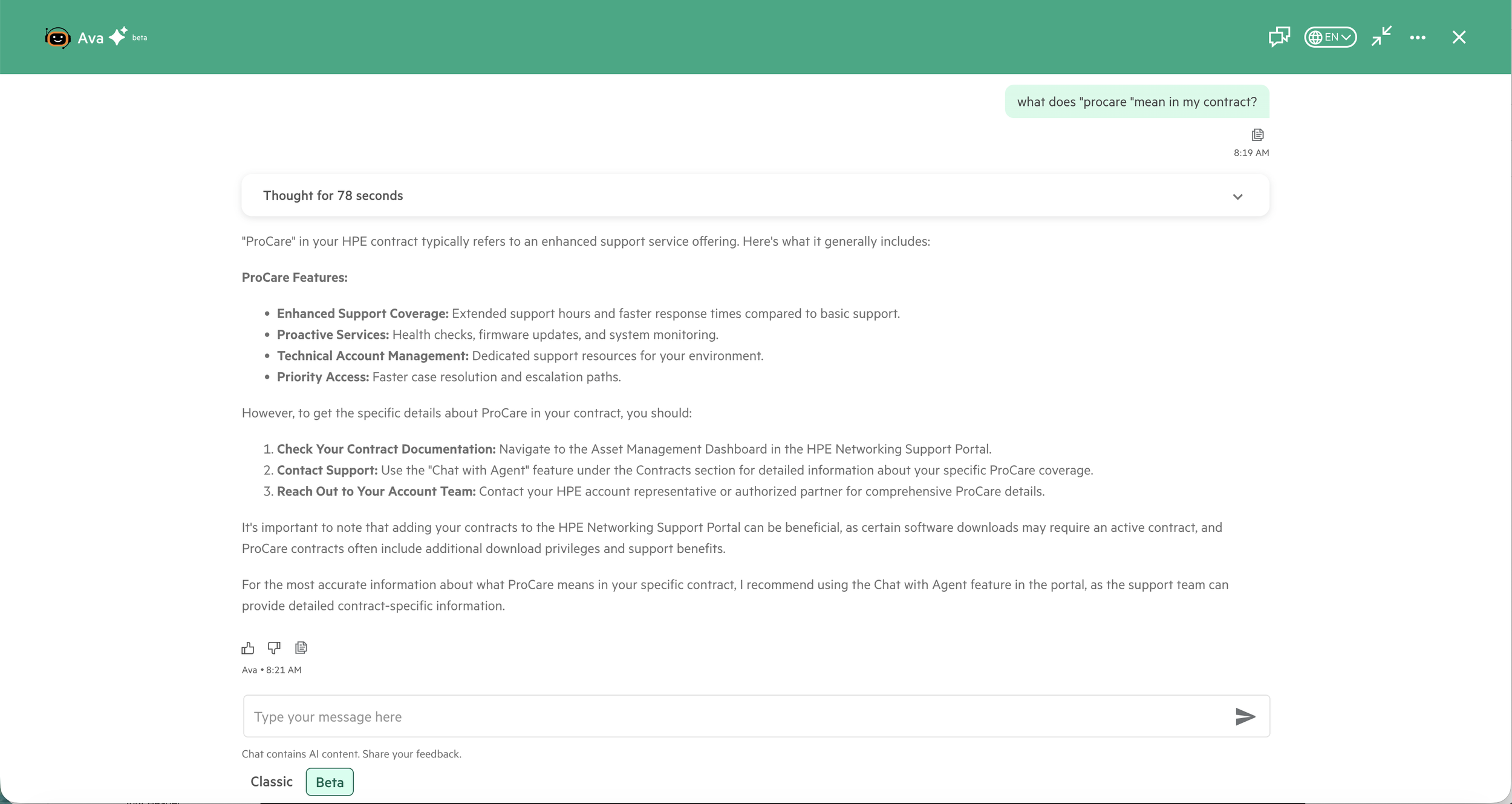

2. Read and Explain Documentation in Seconds

The old system linked to 50-page PDFs. Users had to download, search, and interpret on their own.

The new experience:

User: "What's covered under ProCare service?"

AI: [Shows processing indicator] "ProCare includes 24/7 phone support, advanced hardware replacement, and software updates. Source: ProCare Service Guide, page 12."

AI reads and explains documentation in seconds—no more hunting through 50-page PDFs for answers

Key elements:

Processing indicator reduces "black box" anxiety

Plain language explanations of complex technical docs

The trust mechanic: Every answer cited its source, so users could verify it themselves. That transparency raised trust rather than lowering it.
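A minimal sketch of how an answer could carry its citation, so the source line is never dropped when rendering. The `Source` and `Answer` types, the `render` function, and the fallback wording for uncited answers are all hypothetical illustrations, not the shipped implementation.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass(frozen=True)
class Source:
    """Where an answer came from (hypothetical shape)."""
    title: str
    page: Optional[int] = None


@dataclass(frozen=True)
class Answer:
    """An AI reply plus the document it was drawn from, if any."""
    text: str
    source: Optional[Source] = None


def render(answer: Answer) -> str:
    """Format a reply so the user always sees a citation or an explicit gap."""
    if answer.source is None:
        # Assumed fallback wording: state the limitation instead of hiding it.
        return f"{answer.text} (No source found. You can ask for an agent to confirm.)"
    citation = answer.source.title
    if answer.source.page is not None:
        citation += f", page {answer.source.page}"
    return f"{answer.text} Source: {citation}."


reply = render(
    Answer(
        text="ProCare includes 24/7 phone support, advanced hardware "
             "replacement, and software updates.",
        source=Source(title="ProCare Service Guide", page=12),
    )
)
print(reply)
```

Making the source part of the answer type, rather than free text appended later, is one way to keep "show our work" from being skipped in a rushed release.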

3. Answer with Live Data, Not Links

The old chatbot could access account data, but only through menus, and it dumped links instead of giving direct answers.

The question: "Which of my devices have expired contracts in the APJ region?"

Old approach: Click through menus → Get links to documentation → Find answer yourself

New approach: Ask naturally → Get direct answer: "You have 3 devices with expired contracts in APJ: [device list]. Would you like to renew these contracts?"

The shift: From "here's where to look" to "here's your answer, and here's what you can do about it."

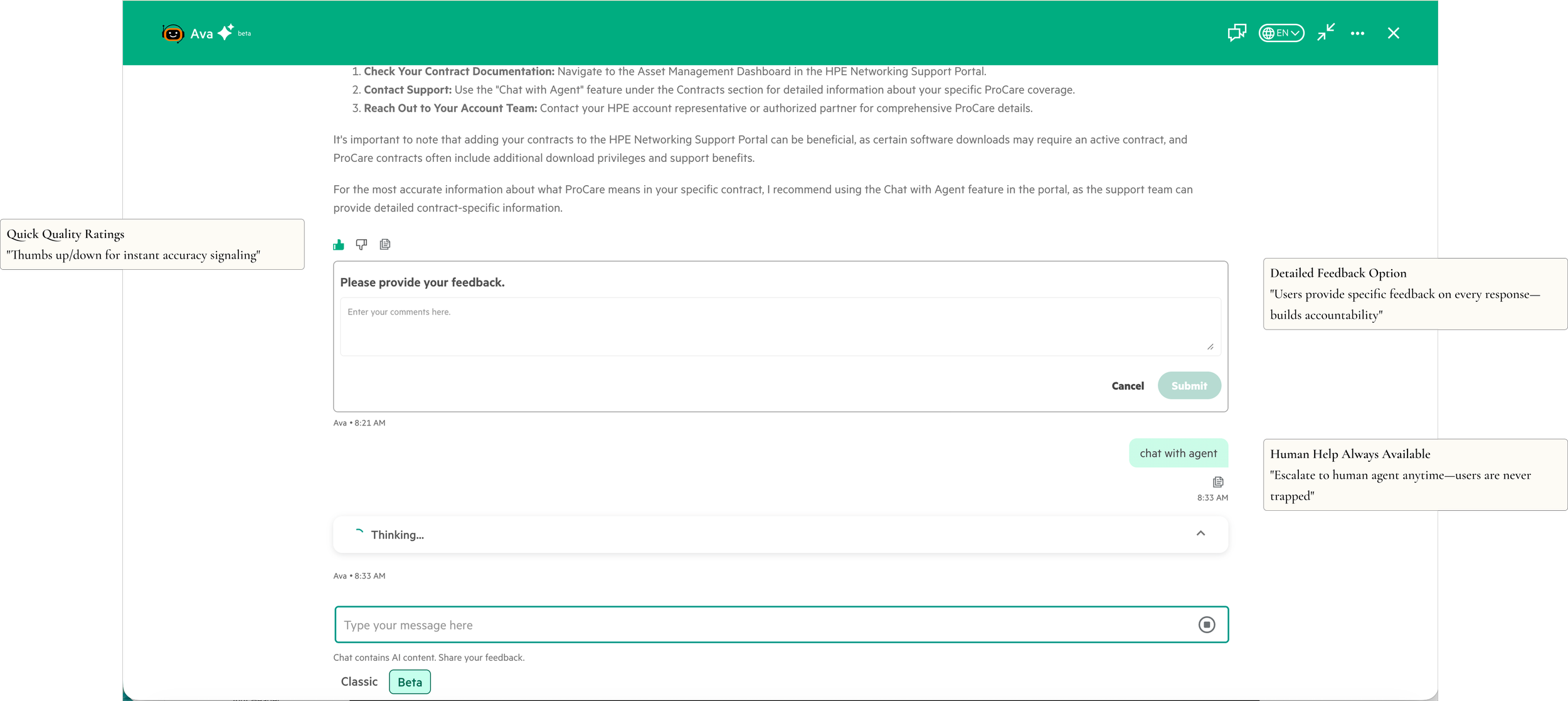

4. Trust Through Transparency

Every interaction reinforced: You're in control. We'll show our work. You can verify everything.

Trust through transparency—optional detailed feedback, quick ratings, and always-available human support

Design decisions:

Thumbs up/down on every response (quick feedback loop)

Optional detailed feedback (for users who want to explain)

Easy agent handoff (users can type "talk to agent" anytime)

When users know they can jump to a human whenever they want, they're more willing to give the AI a shot.
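The always-available handoff can be sketched as a check that runs before any AI processing, so a request for a human is never routed through the model first. Only "talk to agent" comes from the design above; the other phrases and the `wants_agent` name are assumptions for illustration.

```python
# Phrases that trigger a human handoff. "talk to agent" is from the design;
# the remaining variants are assumed examples.
HANDOFF_PHRASES = (
    "talk to agent",
    "talk to an agent",
    "speak to an agent",
)


def wants_agent(message: str) -> bool:
    """Return True if the user is asking for a human agent."""
    # Lowercase and collapse whitespace so casing and stray spaces don't matter.
    normalized = " ".join(message.lower().split())
    return any(phrase in normalized for phrase in HANDOFF_PHRASES)


print(wants_agent("  Talk to   AGENT please"))
print(wants_agent("Which of my devices have expired contracts?"))
```

A production system would more likely use intent classification than a phrase list, but the ordering is the design point: check for the escape route first.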

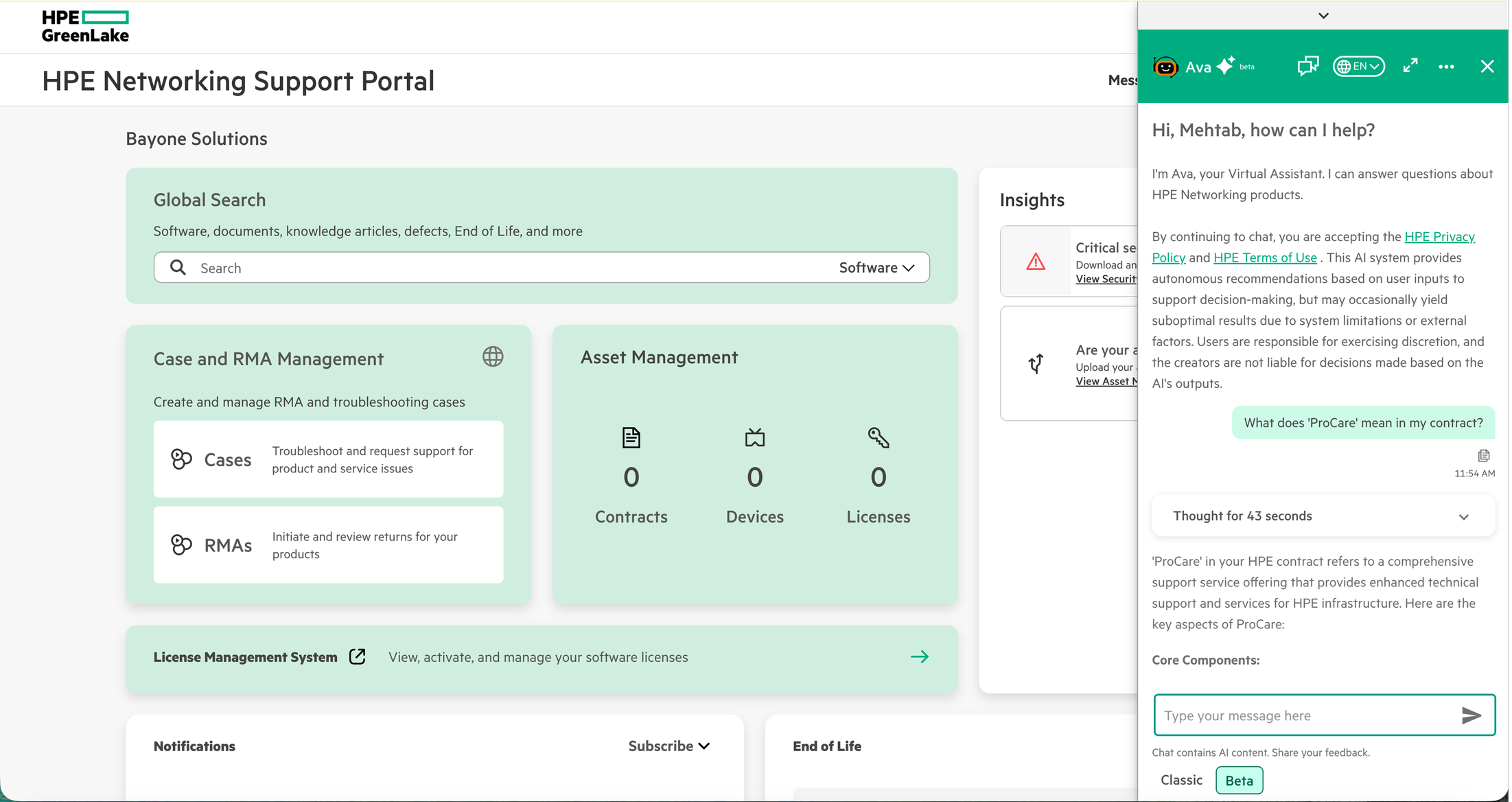

5. Make It Work on Mobile

Mobile and tablet adoption was nearly zero—users avoided the chatbot on smaller screens.

As part of HPE's broader mobile-responsive initiative, I designed the chatbot to work across all devices. This increased adoption and trust by meeting users where they actually work—whether troubleshooting on-site or managing accounts from a desk.

Network admins troubleshoot on-site from mobile while CSMs work from desktop—same experience optimized for each device

🧪 Validation & Iteration

Testing approach:

Ran 50 common queries across 3 user types. Measured accuracy: 78% correct on first attempt, 94% after user clarification.

Key discovery: Users trusted answers more when we showed a "processing" indicator vs. instant responses. Instant felt "too good to be true."

Rapid prototyping: Used Claude + Cursor to prototype conversational flows in hours instead of days, testing multiple patterns before committing to engineering.

Collaboration: I owned conversational design and interaction patterns; the lead designer owned visual systems and component library integration. I worked closely with engineers to confirm that features were technically feasible within the 3-week timeline. Where a feature was too complex to ship immediately, we designed a simpler V1 version and moved the advanced capability to V2.

Launch: Released as V1 MVP with core features. Additional capabilities planned for V2 based on usage data.

📊 Impact

User behavior:

32-percentage-point reduction in escalations (78% to 46% in first month)

3x increase in chatbot usage among previous non-users

Users now start with chatbot instead of bypassing it
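The escalation figures can be read two ways, and the arithmetic below separates them: the drop from 78% to 46% is 32 percentage points, which is a relative reduction of roughly 41%. The function name is a hypothetical helper; the inputs are the two rates reported above.

```python
def escalation_change(before_pct: float, after_pct: float) -> tuple[float, float]:
    """Return (absolute drop in percentage points, relative drop in percent)."""
    points = before_pct - after_pct
    relative = points / before_pct * 100
    return points, relative


points, relative = escalation_change(78, 46)
print(f"{points} percentage points, {relative:.0f}% relative")
# prints "32 percentage points, 41% relative"
```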

Business value:

~$150K projected annual savings from reduced agent workload

Resolution time dropped from 8+ minutes to under 2 minutes for routine queries

Delivered:

MVP in 3 weeks with seamless system integration

Gradual rollout with classic mode to make adoption easier

Data pipeline for continuous AI improvement based on feedback

💭 Reflections

The hardest part wasn't making it conversational—it was making it trustworthy

With 3 weeks and imperfect AI, we couldn't build the smartest chatbot. But we could build the most honest one.

Every trust feature was intentional: show sources so users can verify, show processing to reduce "black box" anxiety, show escape routes so they're willing to try, admit when uncertain so they trust you more later.

The "classic mode" option felt risky to stakeholders, but it taught us: users need control before they'll trust automation.

What worked:

Transparency over perfection (cite sources, show processing, admit limitations)

User control over forced adoption (classic mode, always-available agent handoff)

Rapid prototyping with AI tools to test conversational patterns quickly

What I'd do differently:

Establish baseline metrics earlier—we reconstructed from support logs post-launch instead of capturing clean pre-launch data

Test edge cases with users, not just internally—QA caught technical bugs; users would've caught trust issues faster

Design uncertainty as a first-class pattern, not an edge case—"I'm not sure, but here's what I found" should've been core

The biggest lesson: AI doesn't need to be perfect to be useful—it needs to be honest.

When users understand what the AI can and can't do, when they can check its work, and when they know they can bail out, they don't need 100% accuracy to give it a shot.

Design for control and transparency, not just smart responses.